Which operating system must be used for control plane machines in Red Hat OpenShift?

Ubuntu

Red Hat Enterprise Linux

Red Hat CoreOS

CentOS

Red Hat OpenShift requires specific operating systems for its control plane machines to ensure stability, security, and compatibility. Let’s analyze each option:

A. Ubuntu

Incorrect:

While Ubuntu is a popular Linux distribution, it is not the recommended operating system for OpenShift control plane machines. OpenShift relies on Red Hat-specific operating systems for its infrastructure.

B. Red Hat Enterprise Linux

Incorrect:

Red Hat Enterprise Linux (RHEL) is commonly used for worker nodes in OpenShift clusters. However, control plane machines require a more specialized operating system optimized for Kubernetes workloads.

C. Red Hat CoreOS

Correct:

Red Hat CoreOS (formally Red Hat Enterprise Linux CoreOS, or RHCOS) is the required operating system for OpenShift control plane machines. It is a lightweight, immutable operating system designed specifically for running containerized workloads in Kubernetes environments. CoreOS provides consistency, security, and automatic updates managed by the cluster.

D. CentOS

Incorrect:

CentOS is a community-supported Linux distribution based on RHEL. While it can be used in some Kubernetes environments, it is not supported for OpenShift control plane machines.

Why Red Hat CoreOS?

Immutable Infrastructure: CoreOS is designed to be immutable, so the operating system is updated as a unit and stays consistent across the cluster.

Optimized for Kubernetes: CoreOS is tailored for Kubernetes workloads, providing a secure and reliable foundation for OpenShift control plane components.

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenShift architecture, including the operating systems used for control plane and worker nodes. Understanding the role of Red Hat CoreOS is essential for deploying and managing OpenShift clusters effectively.

For example, Juniper Contrail integrates with OpenShift to provide advanced networking features, relying on CoreOS for secure and efficient operation of control plane components.

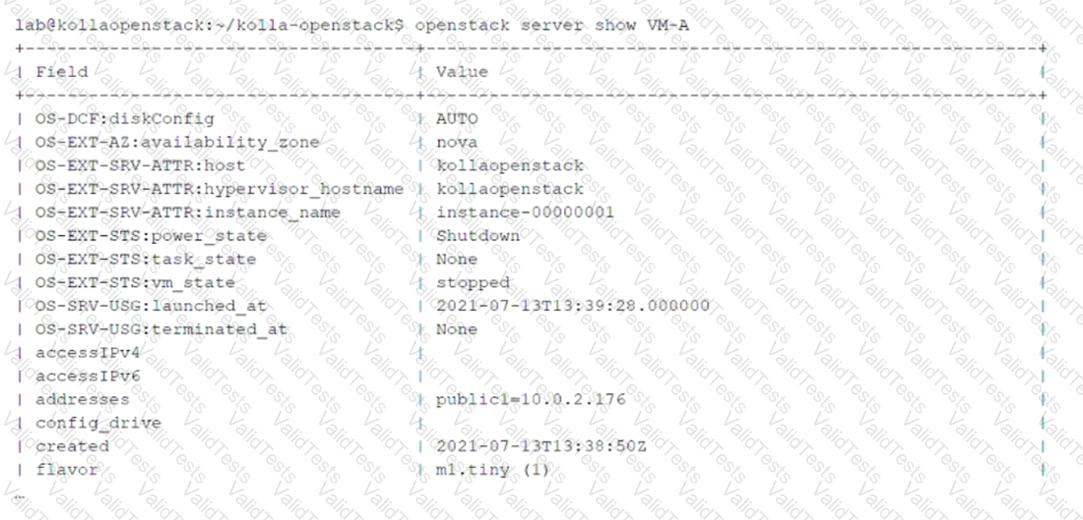

Click the Exhibit button.

You have issued the openstack server show VM-A command and received the output shown in the exhibit.

To which virtual network is the VM-A instance attached?

m1.tiny

public1

Nova

kollaopenstack

The openstack server show command provides detailed information about a specific virtual machine (VM) instance in OpenStack. The output includes details such as the instance name, network attachments, power state, and more. Let’s analyze the question and options:

Key Information from the Exhibit:

The addresses field in the output shows:

public1=10.0.2.176

This indicates that the VM-A instance is attached to the virtual network named public1, with an assigned IP address of 10.0.2.176.
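As a rough illustration, the addresses value can be split into a network name and its IP addresses. The sketch below assumes the `network=ip` form quoted above, with semicolons between networks and commas between addresses; the function name `parse_addresses` is hypothetical and not part of any OpenStack tool.

```python
def parse_addresses(field: str) -> dict:
    """Split an 'addresses' value such as 'public1=10.0.2.176' into
    a mapping of network name -> list of IP addresses."""
    networks = {}
    for entry in field.split(";"):
        entry = entry.strip()
        if not entry:
            continue
        # Everything before '=' is the network name, the rest are addresses.
        name, _, ips = entry.partition("=")
        networks[name.strip()] = [ip.strip() for ip in ips.split(",") if ip.strip()]
    return networks


attached = parse_addresses("public1=10.0.2.176")
print(attached)  # {'public1': ['10.0.2.176']}
```

The dictionary key is the virtual network name, which is the value the question asks for.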

Option Analysis:

A. m1.tiny

Incorrect: m1.tiny refers to the flavor of the VM, which specifies the resource allocation (e.g., CPU, memory, disk). It is unrelated to the virtual network.

B. public1

Correct: The addresses field explicitly states that the VM-A instance is attached to the public1 virtual network.

C. Nova

Incorrect: Nova is the OpenStack compute service that manages VM instances. It is not a virtual network.

D. kollaopenstack

Incorrect: kollaopenstack appears in the output as the hostname or project name but does not represent a virtual network.

Why public1?

Network Attachment: The addresses field in the output directly identifies the virtual network (public1) to which the VM-A instance is attached.

IP Address Assignment: The IP address (10.0.2.176) confirms that the VM is connected to the public1 network.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server show command. Recognizing how virtual networks are represented in OpenStack is essential for managing VM connectivity.

For example, Juniper Contrail integrates with OpenStack Neutron to provide advanced networking features for virtual networks like public1.

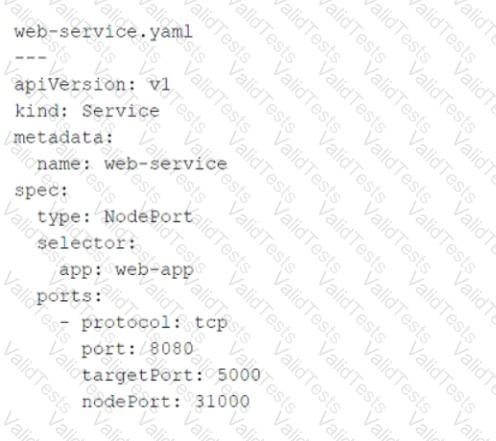

Click the Exhibit button.

Referring to the exhibit, which port number would external users use to access the WEB application?

80

8080

31000

5000

The YAML file provided in the exhibit defines a Kubernetes Service object of type NodePort. Let’s break down the key components of the configuration and analyze how external users access the WEB application:

Key Fields in the YAML File:

type: NodePort:

This specifies that the service is exposed on a static port on each node in the cluster. External users can access the service using the node's IP address and the assigned nodePort.

port: 8080:

This is the port on which the service is exposed internally within the Kubernetes cluster. Other services or pods within the cluster can communicate with this service using port 8080.

targetPort: 5000:

This is the port on which the actual application (WEB application) is running inside the pod. The service forwards traffic from port: 8080 to targetPort: 5000.

nodePort: 31000:

This is the port on the node (host machine) where the service is exposed externally. External users will use this port to access the WEB application.

How External Users Access the WEB Application:

External users access the WEB application using the node's IP address and the nodePort value (31000).

The Kubernetes service listens on this port and forwards incoming traffic to the appropriate pods running the WEB application.
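The three port fields can be traced with a small sketch. The dictionary below is a hypothetical reconstruction of the Service: only the type and the three port values come from the explanation above, while the name `web-svc` and the selector are assumptions made for illustration.

```python
from typing import Optional

# Hypothetical stand-in for the exhibit; metadata.name and selector are assumed.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web-svc"},
    "spec": {
        "type": "NodePort",
        "selector": {"app": "web"},
        "ports": [{"port": 8080, "targetPort": 5000, "nodePort": 31000}],
    },
}


def external_port(svc: dict) -> Optional[int]:
    """Return the node-level port of a NodePort Service, or None for other types."""
    spec = svc["spec"]
    if spec.get("type") != "NodePort":
        return None
    return spec["ports"][0].get("nodePort")


def traffic_path(svc: dict) -> str:
    """Describe how a request travels from a node to the pod."""
    p = svc["spec"]["ports"][0]
    return f"node:{p['nodePort']} -> service:{p['port']} -> pod:{p['targetPort']}"


print(external_port(service))  # 31000
print(traffic_path(service))   # node:31000 -> service:8080 -> pod:5000
```

Only the first hop uses a port that is reachable from outside the cluster, which is why 31000 is the value external users need.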

Why Not Other Options?

A. 80: Port 80 is commonly used for HTTP traffic, but it is not specified in the YAML file. The service does not expose port 80 externally.

B. 8080: Port 8080 is the internal port used within the Kubernetes cluster. It is not the port exposed to external users.

D. 5000: Port 5000 is the target port where the application runs inside the pod. It is not directly accessible to external users.

Why 31000?

NodePort Service Type: The NodePort service type exposes the application on a high-numbered port (default range: 30000–32767) on each node in the cluster.

External Accessibility: External users must use the nodePort value (31000) along with the node's IP address to access the WEB application.
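A quick way to sanity-check the answer is to test each option against the default NodePort range. This is a minimal sketch that assumes the cluster keeps the default range quoted above; administrators can configure a different range, and the helper name is hypothetical.

```python
# Default NodePort range, 30000 through 32767 inclusive.
DEFAULT_NODEPORT_RANGE = range(30000, 32768)


def in_default_nodeport_range(port: int) -> bool:
    """True if the port could be a nodePort under the default range."""
    return port in DEFAULT_NODEPORT_RANGE


# The four answer options from the question.
for candidate in (80, 8080, 31000, 5000):
    print(candidate, in_default_nodeport_range(candidate))
```

Of the four options, only 31000 falls inside the range.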

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes networking concepts, including service types like ClusterIP, NodePort, and LoadBalancer. Understanding how NodePort services work is essential for exposing applications to external users in Kubernetes environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features, such as load balancing and network segmentation, for services like the one described in the exhibit.

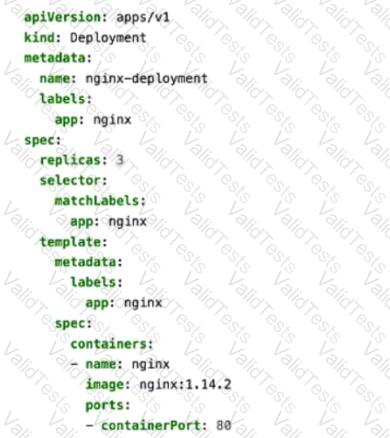

Click the Exhibit button.

You apply the manifest file shown in the exhibit.

Which two statements are correct in this scenario? (Choose two.)

The created pods are receiving traffic on port 80.

This manifest is used to create a deployment.

This manifest is used to create a deploymentConfig.

Four pods are created as a result of applying this manifest.

The provided YAML manifest defines a Kubernetes Deployment object that creates and manages a set of pods running the NGINX web server. Let’s analyze each statement in detail:

A. The created pods are receiving traffic on port 80.

Correct:

The containerPort: 80 field in the manifest specifies that the NGINX container listens on port 80 for incoming traffic.

While this does not expose the pods externally, it ensures that the application inside the pod (NGINX) is configured to receive traffic on port 80.

B. This manifest is used to create a deployment.

Correct:

The kind: Deployment field explicitly indicates that this manifest is used to create a Kubernetes Deployment.

Deployments are used to manage the desired state of pods, including scaling, rolling updates, and self-healing.

C. This manifest is used to create a deploymentConfig.

Incorrect:

deploymentConfig is a concept specific to OpenShift, not standard Kubernetes. In OpenShift, deploymentConfig provides additional features like triggers and lifecycle hooks, but this manifest uses the standard Kubernetes Deployment object.

D. Four pods are created as a result of applying this manifest.

Incorrect:

The replicas: 3 field in the manifest specifies that the Deployment will create three replicas of the NGINX pod. Therefore, only three pods are created, not four.

Why These Statements?

Traffic on Port 80:

The containerPort: 80 field ensures that the NGINX application inside the pod listens on port 80. This is critical for the application to function as a web server.

Deployment Object:

The kind: Deployment field confirms that this manifest creates a Kubernetes Deployment, which manages the lifecycle of the pods.

Replica Count:

The replicas: 3 field explicitly states that three pods will be created. Any assumption of four pods is incorrect.
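The three fields discussed above can be read from a manifest programmatically. The dictionary below is a hypothetical stand-in for the exhibit: only kind, replicas, and containerPort are taken from the explanation, while the object name, labels, and image are assumptions.

```python
# Hypothetical reconstruction; metadata, labels, and image are assumed values.
manifest = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "nginx-deployment"},
    "spec": {
        "replicas": 3,
        "selector": {"matchLabels": {"app": "nginx"}},
        "template": {
            "metadata": {"labels": {"app": "nginx"}},
            "spec": {
                "containers": [
                    {"name": "nginx", "image": "nginx", "ports": [{"containerPort": 80}]}
                ]
            },
        },
    },
}


def summarize(m: dict) -> dict:
    """Extract the object kind, replica count, and declared container ports."""
    containers = m["spec"]["template"]["spec"]["containers"]
    ports = [p["containerPort"] for c in containers for p in c.get("ports", [])]
    # A Deployment without an explicit replicas field defaults to one pod.
    return {
        "kind": m["kind"],
        "replicas": m["spec"].get("replicas", 1),
        "container_ports": ports,
    }


print(summarize(manifest))
# {'kind': 'Deployment', 'replicas': 3, 'container_ports': [80]}
```

The summary lines up with the analysis: the object is a Deployment, it requests three pods, and the container declares port 80.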

Additional Context:

Kubernetes Deployments: Deployments are one of the most common Kubernetes objects used to manage stateless applications. They ensure that the desired number of pod replicas is always running and can handle updates or rollbacks seamlessly.

Ports in Kubernetes: The containerPort field in the pod specification defines the port on which the containerized application listens. However, to expose the pods externally, a Kubernetes Service (e.g., NodePort, LoadBalancer) must be created.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes concepts, including Deployments, Pods, and networking. Understanding how Deployments work and how ports are configured is essential for managing containerized applications in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features for Deployments like the one described in the exhibit.

Click the Exhibit button.

Referring to the exhibit, which two statements are correct? (Choose two.)

The myvSRX instance is using a default image.

The myvSRX instance is a part of a default network.

The myvSRX instance is created using a custom flavor.

The myvSRX instance is currently running.

The openstack server list command provides information about virtual machine (VM) instances in the OpenStack environment. Let’s analyze the exhibit and each statement:

Key Information from the Exhibit:

The output shows details about the myvSRX instance:

Status: ACTIVE (indicating the instance is running).

Networks: VN-A-10.1.0.3 (indicating the instance is part of a specific network).

Image: vSRX3 (indicating the instance was created using a custom image).

Flavor: vSRX-Flavor (indicating the instance was created using a custom flavor).

Option Analysis:

A. The myvSRX instance is using a default image.

Incorrect: The image name vSRX3 suggests that this is a custom image, not the default image provided by OpenStack.

B. The myvSRX instance is a part of a default network.

Incorrect: The network name VN-A-10.1.0.3 indicates that the instance is part of a specific network, not the default network.

C. The myvSRX instance is created using a custom flavor.

Correct: The flavor name vSRX-Flavor indicates that the instance was created using a custom flavor, which defines the CPU, RAM, and disk space properties.

D. The myvSRX instance is currently running.

Correct: The ACTIVE status confirms that the instance is currently running.

Why These Statements?

Custom Flavor: The vSRX-Flavor name clearly indicates that a custom flavor was used to define the instance's resource allocation.

Running Instance: The ACTIVE status confirms that the instance is operational and available for use.
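The same reasoning can be expressed as two simple checks on a row of the output. This is only a sketch: the set of conventional flavor names is an assumption based on the m1.* names commonly shipped with test deployments, and operators can rename or delete them.

```python
# Assumed list of stock flavor names; a real cloud may define others.
CONVENTIONAL_FLAVORS = {"m1.tiny", "m1.small", "m1.medium", "m1.large", "m1.xlarge"}


def describe(server: dict) -> dict:
    """Report whether a server row is running and uses a non-stock flavor."""
    return {
        "running": server["Status"] == "ACTIVE",
        "custom_flavor": server["Flavor"] not in CONVENTIONAL_FLAVORS,
    }


# Values quoted from the exhibit analysis above.
my_vsrx = {"Name": "myvSRX", "Status": "ACTIVE", "Image": "vSRX3", "Flavor": "vSRX-Flavor"}
print(describe(my_vsrx))  # {'running': True, 'custom_flavor': True}
```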

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server list command. Recognizing how images, flavors, and statuses are represented is essential for managing VM instances effectively.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking features for VMs, ensuring seamless operation based on their configurations.

The openstack user list command uses which OpenStack service?

Cinder

Keystone

Nova

Neutron

OpenStack provides various services to manage cloud infrastructure resources, including user management. Let’s analyze each option:

A. Cinder

Incorrect: Cinder is the OpenStack block storage service that provides persistent storage volumes for virtual machines. It is unrelated to managing users.

B. Keystone

Correct: Keystone is the OpenStack identity service responsible for authentication, authorization, and user management. The openstack user list command interacts with Keystone to retrieve a list of users in the OpenStack environment.

C. Nova

Incorrect: Nova is the OpenStack compute service that manages virtual machine instances. It does not handle user management.

D. Neutron

Incorrect: Neutron is the OpenStack networking service that manages virtual networks, routers, and IP addresses. It is unrelated to user management.

Why Keystone?

Identity Management: Keystone serves as the central identity provider for OpenStack, managing users, roles, and projects.

API Integration: Commands like openstack user list rely on Keystone's APIs to query and display user information.
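One way to remember which service sits behind a command is to look at the resource word that follows `openstack`. The lookup table below is a simplified sketch covering only the services named in this question; the function name is hypothetical and the table is not an exhaustive map of the CLI.

```python
# Resource keyword -> service that owns it (simplified for this question).
SERVICE_BY_RESOURCE = {
    "user": "Keystone (identity)",
    "project": "Keystone (identity)",
    "server": "Nova (compute)",
    "network": "Neutron (networking)",
    "volume": "Cinder (block storage)",
}


def service_for(command: str) -> str:
    """Map a command such as 'openstack user list' to the service behind it."""
    parts = command.split()
    if len(parts) < 2 or parts[0] != "openstack":
        raise ValueError(f"not an openstack command: {command!r}")
    return SERVICE_BY_RESOURCE.get(parts[1], "unknown")


print(service_for("openstack user list"))         # Keystone (identity)
print(service_for("openstack server show VM-A"))  # Nova (compute)
```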

JNCIA Cloud References:

The JNCIA-Cloud certification covers OpenStack services, including Keystone, as part of its cloud infrastructure curriculum. Understanding Keystone’s role in user management is essential for operating OpenStack environments.

For example, Juniper Contrail integrates with OpenStack Keystone to enforce authentication and authorization for network resources.

Which Docker component builds, runs, and distributes Docker containers?

dockerd

docker registry

docker cli

container

Docker is a popular containerization platform that includes several components to manage the lifecycle of containers. Let’s analyze each option:

A. dockerd

Correct: The Docker daemon (dockerd) is the core component responsible for building, running, and distributing Docker containers. It manages Docker objects such as images, containers, networks, and volumes, and handles requests from the Docker CLI or API.

B. docker registry

Incorrect: A Docker registry is a repository for storing and distributing Docker images. While it plays a role in distributing containers, it does not build or run them.

C. docker cli

Incorrect: The Docker CLI (Command Line Interface) is a tool used to interact with the Docker daemon (dockerd). It is not responsible for building, running, or distributing containers but rather sends commands to the daemon.

D. container

Incorrect: A container is an instance of a running application created from a Docker image. It is not a component of Docker but rather the result of the Docker daemon's operations.

Why dockerd?

Central Role: The Docker daemon (dockerd) is the backbone of the Docker platform, managing all aspects of container lifecycle management.

Integration: It interacts with the host operating system and container runtime to execute tasks like building images, starting containers, and managing resources.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Docker as part of its containerization curriculum. Understanding the role of the Docker daemon is essential for managing containerized applications in cloud environments.

For example, Juniper Contrail integrates with Docker to provide advanced networking and security features for containerized workloads, relying on the Docker daemon to manage containers.

Which two statements are correct about Kubernetes resources? (Choose two.)

A ClusterIP type service can only be accessed within a Kubernetes cluster.

A daemonSet ensures that a replica of a pod is running on all nodes.

A deploymentConfig is a Kubernetes resource.

NodePort service exposes the service externally by using a cloud provider load balancer.

Kubernetes resources are the building blocks of Kubernetes clusters, enabling the deployment and management of applications. Let’s analyze each statement:

A. A ClusterIP type service can only be accessed within a Kubernetes cluster.

Correct:

A ClusterIP service is the default type of Kubernetes service. It exposes the service internally within the cluster, assigning it a virtual IP address that is accessible only to other pods or services within the same cluster. External access is not possible with this service type.

B. A daemonSet ensures that a replica of a pod is running on all nodes.

Correct:

A daemonSet ensures that a copy of a specific pod is running on every node in the cluster (or a subset of nodes if specified). This is commonly used for system-level tasks like logging agents or monitoring tools that need to run on all nodes.

C. A deploymentConfig is a Kubernetes resource.

Incorrect:

deploymentConfig is a concept specific to OpenShift, not standard Kubernetes. In Kubernetes, the equivalent resource is called a Deployment, which manages the desired state of pods and ReplicaSets.

D. NodePort service exposes the service externally by using a cloud provider load balancer.

Incorrect:

A NodePort service exposes the service on a static port on each node in the cluster, allowing external access via the node's IP address and the assigned port. However, it does not use a cloud provider load balancer. The LoadBalancer service type is the one that leverages cloud provider load balancers for external access.

Why These Statements?

ClusterIP: Ensures internal-only communication, making it suitable for backend services that do not need external exposure.

DaemonSet: Guarantees that a specific pod runs on all nodes, ensuring consistent functionality across the cluster.
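The distinctions above can be modeled in a few lines. This is a simplified sketch, not Kubernetes code: the table summarizes the three Service types as described in the explanation, and `daemonset_nodes` is a hypothetical helper that mimics DaemonSet placement on a made-up three-node cluster while ignoring taints and tolerations.

```python
from typing import Optional

# Exposure characteristics of the three Service types discussed above.
SERVICE_TYPES = {
    "ClusterIP":    {"external_access": False, "cloud_load_balancer": False},
    "NodePort":     {"external_access": True,  "cloud_load_balancer": False},
    "LoadBalancer": {"external_access": True,  "cloud_load_balancer": True},
}


def daemonset_nodes(nodes: dict, node_selector: Optional[dict] = None) -> list:
    """Return the node names that would each run one DaemonSet pod.

    `nodes` maps node name -> labels. With no selector every node is chosen;
    otherwise only nodes whose labels contain every selector key/value pair.
    """
    selector = node_selector or {}
    return [
        name for name, labels in nodes.items()
        if all(labels.get(k) == v for k, v in selector.items())
    ]


# Hypothetical cluster used only for illustration.
cluster = {
    "node-1": {"role": "worker"},
    "node-2": {"role": "worker"},
    "node-3": {"role": "infra"},
}

print(daemonset_nodes(cluster))                      # ['node-1', 'node-2', 'node-3']
print(daemonset_nodes(cluster, {"role": "worker"}))  # ['node-1', 'node-2']
print(SERVICE_TYPES["NodePort"]["cloud_load_balancer"])  # False
```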

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes resources and their functionalities, including services, DaemonSets, and Deployments. Understanding these concepts is essential for managing Kubernetes clusters effectively.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features for services and DaemonSets, ensuring seamless operation of distributed applications.

Which feature of Linux enables kernel-level isolation of global resources?

ring protection

stack protector

namespaces

shared libraries

Linux provides several mechanisms for isolating resources and ensuring security. Let’s analyze each option:

A. ring protection

Incorrect: Ring protection refers to CPU privilege levels (e.g., Rings 0–3) that control access to system resources. While important for security, it does not provide kernel-level isolation of global resources.

B. stack protector

Incorrect: Stack protector is a compiler feature that helps prevent buffer overflow attacks by adding guard variables to function stacks. It is unrelated to resource isolation.

C. namespaces

Correct: Namespaces are a Linux kernel feature that provides kernel-level isolation of global resources such as process IDs, network interfaces, mount points, and user IDs. Each namespace has its own isolated view of these resources, enabling features like containerization.

D. shared libraries

Incorrect: Shared libraries allow multiple processes to use the same code, reducing memory usage. They do not provide isolation or security.

Why Namespaces?

Resource Isolation: Namespaces isolate processes, networks, and other resources, ensuring that changes in one namespace do not affect others.

Containerization Foundation: Namespaces are a core technology behind containerization platforms like Docker and Kubernetes, enabling lightweight and secure environments.
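On a Linux host, the namespaces a process belongs to are visible as symbolic links under /proc. The sketch below lists them for the current process; it assumes a Linux system with /proc mounted and returns an empty result elsewhere. The function name is hypothetical.

```python
import os


def current_namespaces(pid: str = "self") -> dict:
    """Map namespace type (net, pid, mnt, ...) to its kernel identifier."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or /proc is unavailable
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "net:[<inode>]".
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result


for name, ident in current_namespaces().items():
    print(f"{name:20} {ident}")
```

Two processes that report the same identifier for a given type share that namespace; a process inside a container typically reports different identifiers from a process on the host.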

JNCIA Cloud References:

The JNCIA-Cloud certification covers Linux fundamentals, including namespaces, as part of its containerization curriculum. Understanding namespaces is essential for managing containerized workloads in cloud environments.

For example, Juniper Contrail leverages namespaces to isolate network resources in containerized environments, ensuring secure and efficient operation.

Which type of virtualization provides containerization and uses a microservices architecture?

hardware-assisted virtualization

OS-level virtualization

full virtualization

paravirtualization

Virtualization technologies enable the creation of isolated environments for running applications or services. Let’s analyze each option:

A. hardware-assisted virtualization

Incorrect: Hardware-assisted virtualization (e.g., Intel VT-x, AMD-V) provides support for running full virtual machines (VMs) on physical hardware. It is not related to containerization or microservices architecture.

B. OS-level virtualization

Correct: OS-level virtualization enables containerization, where multiple isolated user-space instances (containers) run on a single operating system kernel. Containers are lightweight and share the host OS kernel, making them ideal for microservices architectures. Docker is a common example, and Kubernetes is typically used to orchestrate such containers.

C. full virtualization

Incorrect: Full virtualization involves running a complete guest operating system on top of a hypervisor (e.g., VMware ESXi, KVM). While it provides strong isolation, it is not as lightweight or efficient as containerization for microservices.

D. paravirtualization

Incorrect: Paravirtualization involves modifying the guest operating system to communicate directly with the hypervisor. Like full virtualization, it is used for running VMs, not containers.

Why OS-Level Virtualization?

Containerization: OS-level virtualization creates isolated environments (containers) that share the host OS kernel but have their own file systems, libraries, and configurations.

Microservices Architecture: Containers are well-suited for deploying microservices because they are lightweight, portable, and scalable.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including OS-level virtualization. Containerization is a key component of modern cloud-native architectures, enabling efficient deployment of microservices.

For example, Juniper Contrail integrates with Kubernetes to manage containerized workloads in cloud environments. OS-level virtualization is fundamental to this integration.